This is likely to be a relatively contentious post. I've spent a bit of time trying to get to like Android, and, in short, I can't do it. I don't like Android. I'm sure it's OK as a smartphone OS, but on a tablet, it simply doesn't work for me.

Now, I'm aware that I'm probably not the average user, but I'm (humbly enough) pretty well attuned to what people need to make computers work. That comes from 20+ years in "the biz", using pretty much every operating system there's ever been, including some real weirdos that most people haven't even heard of.

Now, to start hammering on what Android's got wrong, we really need to look at a comparable system that's got it *right*. And it needs to be something I have near me, so out comes the trusty Newton. Yep, that's right. The Newton. A 13 year old, underpowered "pocketable" that was ridiculed in its earlier incarnations for its pisspoor handwriting recognition. Android's gotta be better than that, right? Read on for a load of words on why I think the way I do, but the executive summary is "No, wrong".

Philosophy

Before we get to the "nitty gritty" of little things, we need to look at the design rationales of the systems, or, for want of a better phrase, their "philosophy".

Newton came from a real intention to make a computer that was different, that was better. A radical shift from the 100lb hulking monoliths that lived on or under our desks at the time. A powerful computer that could be dropped into your pocket. A computer that anyone could use. A computer that didn't need a keyboard. It may have been a commercial failure (it cost Apple a lot of money at a time it couldn't afford it), but it opened a whole range of new business markets, and the whole concept of a pocketable "real computer" made a lot of money for other people.

Android comes from Google. In short, it can be summed up as a device for getting more eyes to Google's primary business, i.e. advertising. Google want to hit a new market (originally smartphones), so they leverage a bunch of open source software and try to make something that looks like what the current market leader (iPhone, in this case) looks like, and dump out a beta. Being "open" means that the hardware manufacturers can make it work on their chips, being free means that the carriers don't have to pay license fees, and being free means the consumer gets a cheaper device. Everyone wins, right? Well, in reality, not quite. Hardware manufacturers have mainly made *one* version work for *one* generation of chips, and have often not lived up to the GPL requirements so that their initial work could be carried on by the public. Carriers couldn't care less about updates, because they'd rather sell you a new phone with a new contract, and realistically, it's hardware costs that drive the pricepoint anyway. Net result is a messy market with a ~33/33/33 split of the 3 current major versions of Android, and most platforms not being upgradeable.

Then along came iPad, and with it a sudden rush of android-running tablets. Because a tablet is just like a scaled-up smartphone, right? Again, "No, wrong".

The problem here, I think, is that Android doesn't really fit in with the use case for a tablet device, and particularly not for the pocketable type of tablet. At least, not for *my* use case, but it's my post so I'll stick with my requirements, thank you very much. The iPad succeeds because it's a tightly controlled device with a tightly controlled market, aiming at consumption of media. It's not a general purpose computer, really - apps live in their own little walled gardens, and you can't run what you want on it. It's a fucking good little gadget, though.

Android thinks it's a phone. Except when it thinks it's an iPad. It doesn't have the same overall control that the iPad/iPhone has, and where Google have tried to enforce certain hardware requirements, they often don't make sense (for a phone *or* for a tablet) except when you look at it from an advertising pusher's point of view. I mean, really, why does a phone, or a tablet, need a GPS? Sure, it might be useful, but I fail to see the absolute necessity. Why restrict to a specific set of screen sizes? Why must it have a camera? After all, anyone who cares about the photos they take won't be using a phone camera anyway. But I digress slightly.

To sum it up: IMO, Android is a "me too" iPhone/iPad clone, and not a very good one. It suffers from Google's "permanent beta" mania. Get it out fast and dirty, and fuck the early adopters.

User interface

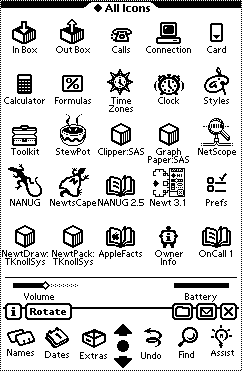

The overall user interface of Newton is *tight*. It's intended to be used with a stylus, although most selections can be made with a finger. Here's what it looks like, more or less (in reality, it's a lot more "green"):

What we see at the bottom of these two screens is the stuff that's always available, viz: a "menu bar" for the current application, and a "Dock" (to use OSX terminology) for a few common apps and functions (undo, find, assist, more on these later). That uses up a fair amount of available screen space, but the rest belongs to your app. What's important here is that the interface is consistent. From the supplied calendar application to 3rd party web browser, you always know where to go.

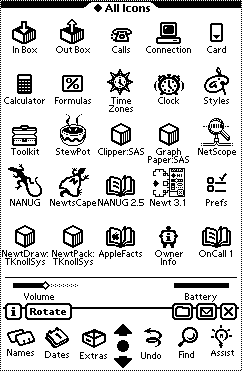

Here's another one, with an "add-on" backdrop, and rotated into landscape mode (the green is a little overbearing, probably as it was pulled from an emulator)

The little star at the top of the screen is the "notification" icon for Newton, which is what Android mainly uses its waste-of-space "status bar" for.

Android is chaotic. It's mainly intended to be used with a finger, but that doesn't always work. One app might grab full screen and require some sort of gesture to pull up menus, another leaves menus and stuff on screen. Mostly apps leave the status bar at the top of the screen, but it's pretty much a waste of space - there's very little you can do with it. Admittedly, most apps respond to the "menu" button, but not all devices have a hardware menu button. Menu widgets don't seem to be standardised, and some are so small as to be unselectable without using a stylus. If nothing else, it's more food for the "open source can't do UIs" crowd.

Scrolling is standardised on both platforms - on Newton you use the up-down arrow keys, on Android you "swipe". I find that my swipes are taken as clicks or some other UI action about 50% of the time, and although the "inertial" scrolling thing looks cool initially, it's intensely frustrating as your swipe goes that bit too far and zooms waaaaaaaay past what you were looking for.

Closing apps is more or less standardised - the Newt has a little X at the bottom left corner, that closes your app. Android recognises the "back" and "home" buttons, but, again, not all devices have them.

Rotation hits some little glitches, as well. Android assumes you have an accelerometer, and auto-rotates the screen to fit. That's nice, as long as you have an accelerometer. I don't, and there doesn't seem to be any standard "rotate" widget as per the Newton. Which is not to say that the Newt is perfect in this respect, some apps behave very badly when rotated. I would argue, however, that Android should at least provide a widget as a sop to those who don't have an accelerometer.

Finding your data

Newton has a "find" button. It works. It always works. 'nuff said.

Android has a filesystem. A filesystem that you can't access without some sort of file browser. I have 3 on my device already - one works for one thing, one works better for another, and so on. Gah.

I don't want to fuck about with filesystems, dammit! Otherwise, you must find your data from within the application you want to use. Interfaces vary. It's messy. It's inconsistent.

This reveals another philosophical thing. Newton doesn't have a "filesystem" as such. No hierarchy, no structure. A big searchable "soup" of data and applications. Everything is data, everything has a bunch of attributes, you can search it. It's a database. While this is somewhat shocking to those used to the current way of doing things, it's hardly new: "Pick" did this in the 60s, BeOS did this to a certain extent, so did Apple to a very limited extent with the "classic" MacOS (and also with OSX).

The current "hierarchical" way of doing things is massively backwards, and it's holding us back. We use the name and location of a file to indicate its metadata. This makes no sense (as, for example, those who've been hit with Windows-based trojans and email viruses can testify). It works for certain system-level applications, but for user data manipulation, it's utter pants.
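To make the "name as metadata" complaint concrete, here's a rough Python sketch of the classic double-extension trick. Everything in it is made up for illustration: the file name, the tiny table of magic bytes, and the `displayed_name` helper, which only imitates a shell that hides extensions it "knows". It's not how any particular OS actually decides these things.

```python
import os

# Hypothetical, deliberately tiny table of leading "magic" bytes.
MAGIC = {
    b"MZ": "windows-executable",
    b"\xff\xd8\xff": "jpeg",
    b"%PDF": "pdf",
}

def type_from_name(filename):
    """Guess the type the lazy way: trust whatever follows the last dot."""
    return os.path.splitext(filename)[1].lstrip(".").lower()

def displayed_name(filename, hidden=("exe",)):
    """Imitate a shell that hides 'known' extensions from the user."""
    stem, ext = os.path.splitext(filename)
    return stem if ext.lstrip(".").lower() in hidden else filename

def type_from_content(data):
    """Guess the type from the data itself, ignoring the name entirely."""
    for magic, kind in MAGIC.items():
        if data.startswith(magic):
            return kind
    return "unknown"

name = "holiday.jpg.exe"        # made-up attachment name
payload = b"MZ\x90\x00"         # starts like a Windows executable

shown = displayed_name(name)
print(shown, "->", type_from_name(shown))      # what the user thinks it is
print(name, "->", type_from_content(payload))  # what it actually is
```

The name says one thing, the data says another, and the user only gets to see the name.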

Currently, this need is covered to a certain extent inside individual applications (think, for example, iTunes), but that data can't be easily shared to other apps without intrinsic knowledge of how to "get at" the database, and upgrades to one app often break other stuff further down the chain.

It would be much nicer to simply have something where you could query along the lines of "show me all the emails I sent to fred over the last month, sorted by date". "Okay, now show me the emails in that list which had attachments". "Now show me the attachments which pertained to project 'foo'". Etc etc.
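Here's a toy sketch, in Python, of what that sort of narrowing-down query could look like over a flat pile of attributed entries. To be clear, this is not Newton's soup API or anything like it: the `Entry` class, the `query` function, the attribute names and the sample data are all invented for the example.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

@dataclass
class Entry:
    """One item in the pile: no path, no folder, just a kind and attributes."""
    kind: str
    attrs: dict = field(default_factory=dict)

def matches(entry, wanted):
    """True if the entry satisfies every constraint (a value or a predicate)."""
    for key, want in wanted.items():
        have = entry.kind if key == "kind" else entry.attrs.get(key)
        if callable(want):
            if not want(have):
                return False
        elif have != want:
            return False
    return True

def query(entries, **wanted):
    """Return all entries matching the given attribute constraints."""
    return [e for e in entries if matches(e, wanted)]

today = date(2010, 9, 1)  # fixed so the example is reproducible
soup = [
    Entry("email", {"to": "fred", "sent": date(2010, 8, 20),
                    "attachments": [{"name": "spec.pdf", "project": "foo"},
                                    {"name": "lunch.jpg", "project": None}]}),
    Entry("email", {"to": "fred", "sent": date(2010, 8, 25), "attachments": []}),
    Entry("email", {"to": "fred", "sent": date(2010, 5, 1),
                    "attachments": [{"name": "old.pdf", "project": "foo"}]}),
    Entry("email", {"to": "mark", "sent": date(2010, 8, 22), "attachments": []}),
    Entry("note", {"project": "foo", "text": "remember the spec"}),
]

# "Show me all the emails I sent to fred over the last month, sorted by date."
month_ago = today - timedelta(days=30)
to_fred = sorted(
    query(soup, kind="email", to="fred",
          sent=lambda d: d is not None and d >= month_ago),
    key=lambda e: e.attrs["sent"],
)

# "Now show me the emails in that list which had attachments."
with_attachments = [e for e in to_fred if e.attrs.get("attachments")]

# "Now show me the attachments which pertained to project 'foo'."
foo_files = [a["name"] for e in with_attachments
             for a in e.attrs["attachments"] if a.get("project") == "foo"]

print(len(to_fred), len(with_attachments), foo_files)
```

Each step just filters the previous result, and nothing anywhere needs to know where a thing "lives".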

It's about time someone came up with a filesystem that is completely non-hierarchical (I'm currently working on a fusefs that does exactly this, actually).

App launching

On the Newton, click on the "extras" button, go to the tab for stuff *you* have classified as applications, click your app. Or use "find". Or click on an associated piece of data. Or you might use "Assist".

On Android, go back to the home screen, go to the right tab, click your app.

To me, the whole "application centric" way of doing things smacks of "desktop computing metaphor crammed into a handheld device" with little thought. But maybe that's just me.

Data input

With Newton, you write on the screen. That's pretty much it. Really. Just write directly in the boxes. Newton usually turns it into a textual representation of what you wrote. Special characters and all. No special voodoo ways of writing a la "graffiti" on the Palm. Made a mistake? Scribble it out, it goes away. Or you can opt to have your text remain as a set of handwritten strokes, and have it recognised later (very handy for note taking in meetings, as the "usually" above implies - Newton's HWR doesn't always get it right, and might need some nudging in the right direction, which you can't do when scribbling at full speed).

Alternatively, there are on-screen keyboards, or, in extremis, the Newton serial keyboard, which is very handy for programming direct on the device.

With HWR, you get around 20-30wpm, about the same as a *fast* typist on a good keyboard. With OSK, 3-10wpm. The Newton keyboard is a bit craptacular though, and you can count on about 15-20wpm using it, along with cramp in the fingers.

With Android on a tablet, you don't have much choice. It's on screen keyboards pretty much all the way unless your device supports hardware keyboards. For standard OSK with prediction, I got about the same as on the Newton (the prediction side of things only seemed to be able to predict one word - "android"). I've heard of 10-15wpm with predictive OSK and a fast OSK replacement you're trained to use (Swype, for example) - about as fast as a "slow" typist. With more training you might get a bit faster.

HWR is good technology. It's fast, and discreet (speech recognition has the potential of being fast, but makes you look like a git, and you can't easily use it in meetings or noisy environments). If you want *really* fast, shorthand recognition could get you into massive wpm speeds - at least as fast as speech and maybe faster. The hardware can do it (my cheapo Chinese tablet is, at a conservative guess, 6-10 times as fast as the Newton without taking into account the vector unit and GPU). Fast, accurate HWR is no idle dream.

There's other failings with Android's input methods: they hide the entire screen when you're using them, so you have no context - try entering data into a form, and you'll be given a "next" button indicating that entering the current data will take you to the next form field, but there's no indication of what this, or the next, form field actually are. Barking bloody mad, that is. Maybe it's fixed in Froyo.

Inter-app communications

Nothing standard under Android. Some apps share, some don't.

This is more or less the system under Newton as well, but it also has "Assist", and that's available to all apps. Yep, that button that looks like a question mark. What does it do?

From some app, maybe a word processor, scribble "send to Mark", and select it. Hit "assist". Newton goes off and looks, finds that Mark might mean 2 people, and that sending might mean faxing or emailing. Asks who, and how. And then goes off and does it. "Call Fred", Assist, and the telephone dialer fires up, dials the number, and then puts a call logger up, allowing you to scribble notes whilst chatting.
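For the curious, the general shape of that interaction can be sketched in a few lines of Python. This is only a guess at the idea, not how Newton actually implements it: the contact list, the action table, the `assist` function and the "last word is the name" parsing are all hypothetical, and the real thing is obviously far smarter.

```python
# Hypothetical data: who a bare first name might mean, and how each verb
# might be carried out.
CONTACTS = {
    "mark": ["Mark Jones", "Mark Smith"],
    "fred": ["Fred Bloggs"],
}
ACTIONS = {
    "send": ["fax", "email"],
    "call": ["phone"],
}

def resolve(question, options, choose):
    """Only bother the user when there is genuinely more than one option."""
    return options[0] if len(options) == 1 else choose(question, options)

def assist(phrase, choose):
    """Interpret '<verb> ... <name>' and return (how, who), or None.

    `choose(question, options)` stands in for the dialog that asks the user.
    """
    words = phrase.split()
    if len(words) < 2:
        return None
    verb, name = words[0].lower(), words[-1].lower()
    if verb not in ACTIONS or name not in CONTACTS:
        return None
    who = resolve(f"Which {name.capitalize()}?", CONTACTS[name], choose)
    how = resolve(f"How should I {verb} it?", ACTIONS[verb], choose)
    return how, who

asked = []  # record of questions put to the "user"

def first_option(question, options):
    """Stand-in for the user: always picks the first option offered."""
    asked.append(question)
    return options[0]

print(assist("send to Mark", first_option))  # ambiguous: two questions asked
print(assist("Call Fred", first_option))     # unambiguous: nothing asked
print(asked)
```

The point is that the app the text was scribbled in doesn't need to know anything about faxing, emailing or dialling; it just hands the phrase over.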

"Assist" is mad cool, and it was available in the early '90s.

The built in apps under Newton *do* share data, and the interface for sharing that data back and forth was available to other developers' apps. The same is more or less the case under Android, although it's hard / impossible for a developer to shoehorn additional stuff into an existing app (as could be done under Newton) without modifying and recompiling.

Synchronisation and so on

The Newton synchronises nicely with desktop apps via serial, ethernet, wifi, bluetooth. As long as you have a sync app, of course. They're pretty hard to come by these days, and it's getting difficult to use a newt with a "modern" desktop OS. You now need 3rd party software to sync with a Mac, but I'll give the Newton a pass on that as the OS the Apple-supplied software ran on hasn't been current for nearly 10 years. Dunno if the windows sync stuff works on Vista/7, but it certainly ran on XP. 64 bit might be what fraks it totally.

Android mainly syncs, as far as I can tell, with "the cloud", which can largely be considered a euphemism for "Google's ads". Well, frankly, fuck the cloud. Desktop syncing is payware, so fuck that too. It really shouldn't be something one needs 3rd party software for - there *should* be some sort of extendable conduit for syncing - hell, OSX provides most of that anyway.

Other stuff

Let's be honest, Android does have a bunch of stuff it can do waaaaay better than the Newt. Video and audio playback is potentially loads better, mainly down to 12 years of additional hardware development. My cheapo tablet has more hardware potential than a top-of-the-range laptop from the Newton era, after all. That said, video playback under Android leaves a lot to be desired. The hardware should have the power to do NLE on video, and in most cases you can't even play stuff back unless it's in a specific format with a specific size. You can't assume codecs are there. A mess, in short.

The web experience on Android is loads better than on the Newt, too, even if it can't easily do embedded flash video (something I heave a sigh of relief over).

eBooks look nicer under Android. Higher DPI, colour screen. Fine. PDFs don't work at all under Newton, so even having an option to read badly-rendered PDFs slowly under Android is a blessing. That applies to 3rd party readers - I've not used the Adobe reader for 2 reasons:

- On the desktop it's a pile of bloatware crap

- My android device doesn't have Google Market, so I can't get to it.

Photos, idem. Colour screen, higher DPI. Win for Android by default and the inexorable march of progress.

One area the Newton wins on is startup. From "press of button" to the "Happy Newton" chime and a usable device is measured in single digits of seconds. Android takes an age. In addition, the Newton comes back to *exactly* where you were when you turned it off or the batteries ran out, no matter how much time has passed between the two. No data loss, nothing. You're back. No finding files, you're still there. In 15 years of using Newtons, I've *never* lost data (not something I can say of PalmOS devices, for example).

Wakeup - I can't compare. Newton is instant, but my tablet doesn't seem to have any PM stuff enabled, so I don't know if it works or not. I have to hard power cycle it. Sucks, but probably not an Android flaw as such.

As far as gaming goes, Android is more modern. But 99% (or more) of the "games" I've found so far available under Android are made of suck. A lot of this is down to Google's utterly braindead decision to use Java, of all things, as a systems programming language. I mean, really. Gaming suck on a platform that uses a garbage collected language with no JIT? Who'd ever have thought it?

And finally, performance.

I have in front of me 2 ARM devices.

One has a 162MHz StrongARM 110 processor, giving 1 DMips/MHz. It has 4MB of RAM and 4MB of flash, with 16MB of additional flash in one of the PCMCIA slots for a total of 20MB *total storage*. It's running a 12 year old, interpreted, prototype based, language.

The other has a 600MHz Cortex-A8 processor, which gives 2 DMips/MHz, and has, in addition, a vector floating point unit, NEON FP extensions, and an OpenCL-enabled GPU. Even without using the addons, it's over *7 times* as fast as the Newton. It has a 13 stage superscalar pipeline and more cache than the Newton has main memory. It has 256MB of main memory and 2GB of flash, with another 8GB of flash in the SD card slot for a total of 10GB storage. It's running software that's mainly written in Java. Oh, did I mention that the processor itself is optimised for Java?

Guess which one feels "snappy"? Yep, you're right - the hardware specs don't make up for the software implementation.
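For the record, here's the back-of-envelope arithmetic behind that comparison, as a few lines of Python. It uses only the figures quoted above, and it assumes (crudely) that Dhrystone throughput is just clock speed times DMips/MHz; it says nothing about the vector unit, the GPU, memory bandwidth or anything else that matters in real life.

```python
def dmips(clock_mhz, dmips_per_mhz):
    """Crude Dhrystone throughput estimate: clock speed times DMips/MHz."""
    return clock_mhz * dmips_per_mhz

newton_dmips = dmips(162, 1.0)   # StrongARM 110 figures quoted above
tablet_dmips = dmips(600, 2.0)   # Cortex-A8 figures quoted above

cpu_ratio = tablet_dmips / newton_dmips
ram_ratio = 256 / 4                 # MB of main memory
storage_ratio = (10 * 1024) / 20    # 10GB vs 20MB, taking 1GB = 1024MB

print(f"CPU:     {newton_dmips:.0f} vs {tablet_dmips:.0f} DMips, x{cpu_ratio:.1f}")
print(f"RAM:     x{ram_ratio:.0f}")
print(f"Storage: x{storage_ratio:.0f}")
```

On integer throughput alone that's a factor of about 7.4, with 64 times the RAM and 512 times the storage thrown in.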

The "feel" of Android 2.1 makes me believe that the vaunted 450% speedup of Froyo's improved Dalvik is probably not overstated. It also makes me wonder why, if there was that much speedup to be had, it wasn't "had" before the initial launch of Android. And how much more there is to be had under the hood. Android's been out for over 2 years now; I'm hardly an early adopter.

No, really. Four hundred and fifty fucking percent faster. What the fuck?